Everything You Need to Know about Data Scraping

In an era where data drives decision-making and innovation, the ability to extract and analyze information from the web is more crucial than ever. Data scraping, a process that automates the collection of structured data from websites, has become a key tool for businesses, researchers, and developers seeking to harness the wealth of information available online. From tracking prices and monitoring market trends to gathering insights for academic research, data scraping enables users to efficiently compile large datasets that would be impractical to collect manually. However, as the practice grows in popularity, it also brings forth a host of legal and ethical challenges that users must navigate. This article delves into the intricacies of data scraping, exploring its definition, tools, applications, and the best practices necessary for responsible and effective use.

Key Takeaways:

- Data scraping is the process of extracting data from websites using automated methods, such as bots, scripts, or web crawlers.

- It involves copying content from websites and converting unstructured data into structured data.

- Data scraping is used for many purposes, such as price monitoring, market research, news monitoring, and lead generation.

- While data scraping can provide many benefits, it also raises legal and ethical concerns if done excessively or without permission.

- Best practices for ethical data scraping include respecting robots.txt files, avoiding over-scraping, using scraped content legally, and letting site owners know about your scraping activities.

What Is Data Scraping?

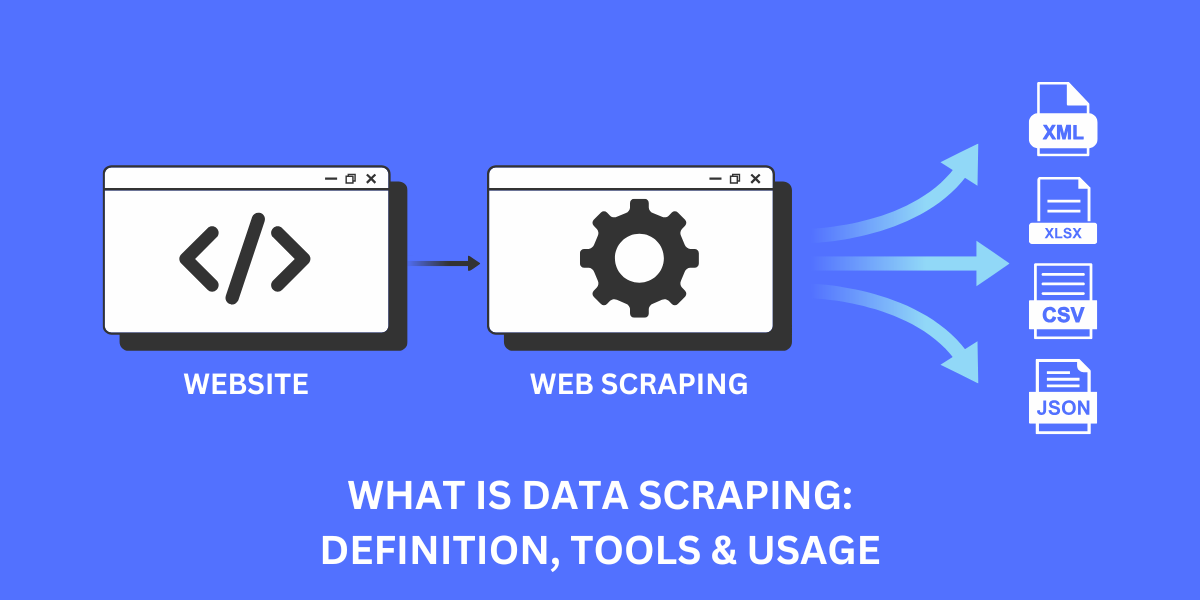

Data scraping, also known as web scraping, web data extraction, or web harvesting, refers to the automated process of collecting structured web data in bulk. It works by extracting the HTML code from websites and then analyzing and converting the unstructured data into structured, machine-readable data that can be easily processed and analyzed.

In simpler terms, data scraping involves using automated bots, scripts, or web crawlers to copy content from websites. This copied content is then organized into a structured format, such as a spreadsheet or database, or exposed through an API, so that large amounts of data can be processed.
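To make the idea of turning page markup into structured data concrete, here is a minimal sketch using only Python's standard library. The HTML fragment, the `TableParser` class, and the `html_table_to_records` helper are illustrative assumptions, not part of any particular scraping tool; a real page would first be downloaded and would usually be messier.

```python
from html.parser import HTMLParser

# Hypothetical HTML fragment standing in for a downloaded product page.
SAMPLE_HTML = """
<table>
  <tr><th>Product</th><th>Price</th></tr>
  <tr><td>Widget</td><td>9.99</td></tr>
  <tr><td>Gadget</td><td>24.50</td></tr>
</table>
"""


class TableParser(HTMLParser):
    """Collects the text of every table cell, grouped by row."""

    def __init__(self):
        super().__init__()
        self.rows = []         # completed rows
        self._row = None       # row currently being built, if any
        self._in_cell = False  # True while inside a <td> or <th>

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._in_cell = True
            self._row.append("")

    def handle_endtag(self, tag):
        if tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None
            self._in_cell = False
        elif tag in ("td", "th"):
            self._in_cell = False

    def handle_data(self, data):
        # Only keep text that appears inside a table cell.
        if self._in_cell and self._row is not None:
            self._row[-1] += data.strip()


def html_table_to_records(html):
    """Use the first row as field names and return the rest as dictionaries."""
    parser = TableParser()
    parser.feed(html)
    header, *body = parser.rows
    return [dict(zip(header, row)) for row in body]


records = html_table_to_records(SAMPLE_HTML)
```

Each record is a plain dictionary keyed by the column headers, which is the kind of structure that can then be written to a spreadsheet or database.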

Data scraping makes it possible to harvest volumes of web data that would be impractical to collect by hand. As the amount of data on the internet continues to grow, data scraping is becoming an increasingly important tool for aggregating and analyzing web data at scale.

How Does Data Scraping Work?

The data scraping process typically involves three main steps:

1. Identifying Data Sources

The first step is identifying websites or online platforms that contain the data you want to extract. For instance, if you wish to collect price data, you would locate e-commerce sites or business directories that display prices.

2. Extracting the Data

Once data sources are identified, the next step is using web scraping tools or custom scripts to extract the target data from the websites automatically. This may involve techniques like:

- Parsing HTML: Analyzing page structure and extracting specific HTML elements like text, images, tables, etc.

- Text pattern matching: Using regular expressions to extract text matching a particular pattern.

- Analyzing page URLs: Identifying patterns in page URLs to extract data.

- DOM parsing: Navigating and analyzing a web page’s Document Object Model (DOM) to extract relevant data.

- Reverse engineering APIs: Reverse engineering REST or SOAP APIs that front-end websites use to display data.
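Two of the techniques above, text pattern matching and URL analysis, can be sketched with the standard library alone. The price format, the `page` query parameter, and the `example.com` URLs are assumptions chosen for illustration; real sites use their own formats and pagination schemes.

```python
import re
from urllib.parse import parse_qs, urlparse

# Assumed price format: a dollar sign, digits, and optional two-digit cents.
PRICE_PATTERN = re.compile(r"\$(\d+(?:\.\d{2})?)")


def extract_prices(text):
    """Return every dollar amount found in the text as a float."""
    return [float(match) for match in PRICE_PATTERN.findall(text)]


def page_number(url):
    """Read a hypothetical 'page' query parameter, or None if it is absent."""
    values = parse_qs(urlparse(url).query).get("page")
    return int(values[0]) if values else None


def build_page_urls(base_url, last_page):
    """Generate listing URLs for pages 1..last_page, assuming '?page=N' pagination."""
    return [f"{base_url}?page={n}" for n in range(1, last_page + 1)]


prices = extract_prices("Widget $9.99, Gadget $24.50, Gizmo $5")
current = page_number("https://example.com/products?page=3&sort=price")
urls = build_page_urls("https://example.com/products", 3)
```

Regular expressions work well for small, predictable patterns like these, while an HTML or DOM parser is usually the safer choice for anything that depends on page structure.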

3. Structuring and Storing Data

After extracting raw website data, the final step is structuring and storing it in databases or spreadsheets so it can be easily analyzed. This may involve:

- Cleaning and standardizing data into consistent formats.

- Structuring data into relational databases or NoSQL data stores.

- Exporting data into spreadsheet formats like CSV or Excel.

- Setting up automated data pipelines for ongoing extraction.
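As a rough illustration of this step, the sketch below cleans a few raw records and stores them both as CSV text and in an SQLite table. The field names, the sample rows, and the `products` table are hypothetical; the cleaning rules are deliberately simple and would need adjusting for real data.

```python
import csv
import io
import sqlite3

# Hypothetical raw records as they might come out of an extraction step.
RAW_ROWS = [
    {"name": "  Widget ", "price": "$9.99"},
    {"name": "Gadget", "price": "24.50"},
    {"name": "", "price": "$5.00"},     # missing name: dropped
    {"name": "Gizmo", "price": "n/a"},  # unparseable price: dropped
]


def clean_rows(rows):
    """Trim whitespace, convert prices to floats, and skip invalid records."""
    cleaned = []
    for row in rows:
        name = row["name"].strip()
        try:
            price = float(row["price"].strip().lstrip("$"))
        except ValueError:
            continue
        if name:
            cleaned.append({"name": name, "price": price})
    return cleaned


def to_csv(rows):
    """Serialize the records as CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["name", "price"])
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


def to_sqlite(rows, connection):
    """Insert the records into a 'products' table, creating it if needed."""
    connection.execute(
        "CREATE TABLE IF NOT EXISTS products (name TEXT, price REAL)"
    )
    connection.executemany(
        "INSERT INTO products (name, price) VALUES (:name, :price)", rows
    )
    connection.commit()


cleaned = clean_rows(RAW_ROWS)
csv_text = to_csv(cleaned)
connection = sqlite3.connect(":memory:")  # in-memory database for the example
to_sqlite(cleaned, connection)
```

Writing to a file instead of an in-memory buffer or database only requires passing an open file to `csv.DictWriter` or a file path to `sqlite3.connect`.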

Why is Data Scraping Used?

There are several valuable applications and use cases of data scraping across different industries:

- Price Monitoring: Track product prices and price histories by scraping e-commerce websites. Competitive pricing monitoring is a common use.

- Market Research: Gather data on competitors, products, reviews, and trends from industry sites.

- News Monitoring: Track news stories, headlines, and public sentiment by extracting articles and content.

- Lead Generation: Build leads lists by extracting business contact details from directories or other websites.

- Recruitment: Scrape job postings from multiple job boards to aggregate hiring data.

- Social Media Monitoring: Extract data from social media sites to analyze performance, trends, and public profiles.

- Research: Gather large datasets for analysis from websites. Common for academic research.

- Search Engine Optimization: Check rankings in search engines by scraping SERPs. Also, find backlink sources.

- Data Integration: Migrate data between websites or merge data from multiple sites.

- Personal Use: Automate tedious copying of public data for individual projects or analysis.

Properly used, data scraping can save immense time and effort compared to manually collecting data. It enables working with huge datasets from the web, opening up new possibilities for analysis and insights.

Is Scraping Legal? Ethical Considerations

While data scraping provides many benefits, it also raises some legal and ethical questions:

- Copyright: Scraper bots copy website content, so copyright law applies. Always check a site’s Terms of Service.

- Data Protection Laws: Laws like GDPR restrict the collection of private personal data without consent.

- Computer Fraud and Abuse Act (CFAA): Unauthorized access to computer systems can violate anti-hacking laws such as the U.S. CFAA.

- Terms of Service Violations: Many sites prohibit scraping in their ToS. Read ToS carefully before scraping.

- Over-Scraping: Excessive scraping may overload servers and harm site performance.

- Distorted Data: Cherry-picking or unrepresentative scraping can distort statistical data.

- Data Privacy: Users may not consent to public-facing data being extracted and reused.

- Competitive Advantage: Scraping competitor data in unfair or deceptive ways to gain an advantage is ethically questionable.

To scrape ethically and legally, keep these principles in mind:

- Respect Robots.txt: The robots.txt file indicates which parts of a site automated clients may access. Follow it.

- Ask for Permission: Contact the website owner and ask for scraping access if possible.

- Check Terms of Service: Review the ToS thoroughly before scraping a website.

- Limit Frequency: Avoid scraping a site more often than necessary, and monitor the performance impact.

- Use Data Responsibly: Only reproduce public data, respect privacy, and use data legally.

- Provide Attribution: When republishing scraped content, provide credit to the source.

- Obey Takedown Requests: If asked to stop scraping a site, do so immediately.

- Follow Laws: Ensure collection and usage of data comply with all applicable laws.

Overall, scraped data should be treated similarly to copyrighted data rather than as an open resource. Get permission when feasible, limit collection, and use it legally and responsibly.
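Checking robots.txt can be automated. The sketch below uses Python's standard `urllib.robotparser` module on a sample file; the robots.txt content, the user-agent name, and the `example.com` URLs are made up for illustration. In a real scraper you would typically point the parser at the live file with `set_url()` and `read()` instead of parsing a string.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real one lives at https://<site>/robots.txt.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""

# Hypothetical name that the scraper would send in its User-Agent header.
USER_AGENT = "example-research-bot"


def build_parser(robots_txt):
    """Parse robots.txt text into a RobotFileParser without any network access."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(SAMPLE_ROBOTS_TXT)

# Ask whether this user agent may fetch each URL before requesting it.
allowed = parser.can_fetch(USER_AGENT, "https://example.com/products")
blocked = parser.can_fetch(USER_AGENT, "https://example.com/private/data")

# Crawl-delay, when present, is the number of seconds to wait between requests.
delay = parser.crawl_delay(USER_AGENT)
```

Note that robots.txt expresses the site owner's wishes for automated clients; it does not replace reading the Terms of Service or asking for permission.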

Web Scraping Tools and Software

Many tools and libraries are available for automating data scraping projects:

Browser Extensions

- Scraper – Chrome extension for extracting tables and data on any web page.

- Data Miner – Scrapes text, tables, and images from web pages into an exportable spreadsheet.

- Octoparse – Visual web scraper and crawler with click-and-select data extraction.

Python Libraries

- Beautiful Soup – Popular Python library for web scraping using HTML/XML parsers.

- Scrapy – Full-featured framework for scraping websites and building spiders.

- Selenium – Automates web browsers for scraping dynamic or JavaScript sites.

- Requests – Simplifies making HTTP requests for accessing web pages to scrape.

JavaScript Libraries

- Puppeteer – Provides an API for browser automation and web scraping with Headless Chrome.

- Cheerio – Fast and flexible web scraping library similar to jQuery for Node.js.

- Apify – Scalable web crawling and scraping platform designed for JavaScript.

Other Programming Languages

- rvest (R) – Simple web scraping package for the R statistical language.

- Web Scraper (Java) – Open-source Java library for data extraction.

- Web Harvesting (C#) – Flexible .NET scraping library built on HtmlAgilityPack and AngleSharp.

SaaS Tools

- ParseHub – Visual web scraper with on-demand and automated data extraction.

- Import.io – GUI web data extraction tool with integrations and automation.

- Scrapinghub (now Zyte) – Provides cloud-based web crawling infrastructure and services.

- Diffbot – API for turning any web page into structured data via machine learning.

For most scraping projects, combining a programming language like Python or JavaScript with a parsing library such as Beautiful Soup or Cheerio offers the most control and customization options.

Data Scraping Best Practices

Follow these guidelines for well-structured, sustainable data scraping operations:

- Review Robots.txt and ToS: Check what data collection is permitted before scraping.

- Build Ethics Checks: Program scrapers to respect exclusion rules and data privacy.

- Use Sitemaps: Leverage sitemaps to identify all pages to scrape systematically.

- Scrape Sensibly: Limit request frequency and volume to avoid overloading servers.

- Maintain User-Agents: Use well-formatted user agents and throttling to mimic human visitors.

- Validate Data: Clean invalid records like empty fields to improve data quality.

- Store Data Securely: Follow cybersecurity best practices when storing scraped data.

- Retry Failed Requests: Use backoffs and retries to handle transient server errors gracefully.

- Regularly Refactor Code: Keep scraper code cleanly written and well-maintained.

- Create Reusable Scrapers: Build modular, configurable scrapers for reuse on future projects.

- Automate Ethically: Minimize accidental data breaches through automated safeguards.

- Follow Data Laws: Ensure data handling processes comply with GDPR, CCPA, and other regulations.

Overall, adopt high ethical standards and optimize the reliability, quality, and security of scraped data. Scraping sustainably also means having contingency plans if sites block scrapers.
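The "Retry Failed Requests" guideline can be sketched as a small wrapper with exponential backoff. Everything named here is an assumption for illustration: `TransientError` stands in for whatever timeout or server error your HTTP client raises, and `flaky_fetch` simulates a page that fails twice before succeeding. The `sleep` parameter is injectable so the example runs without actually waiting.

```python
import time


class TransientError(Exception):
    """Hypothetical error standing in for a timeout or HTTP 5xx response."""


def fetch_with_retries(fetch, url, max_attempts=4, base_delay=1.0, sleep=time.sleep):
    """Call fetch(url), retrying on TransientError with exponential backoff.

    The wait doubles after each failure: base_delay, 2 * base_delay, and so on.
    The last failure is re-raised once max_attempts is exhausted.
    """
    for attempt in range(max_attempts):
        try:
            return fetch(url)
        except TransientError:
            if attempt == max_attempts - 1:
                raise
            sleep(base_delay * 2 ** attempt)


# Simulated fetcher that fails twice and then succeeds.
calls = {"count": 0}


def flaky_fetch(url):
    calls["count"] += 1
    if calls["count"] < 3:
        raise TransientError("temporary failure")
    return f"content of {url}"


waits = []  # records the requested delays instead of sleeping
result = fetch_with_retries(flaky_fetch, "https://example.com/page", sleep=waits.append)
```

In production code it is common to add random jitter to the delay and to retry only on errors that are genuinely temporary, so that permanent failures such as a 404 or a block are not hammered repeatedly.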

The Future of Data Scraping

Data scraping is likely to keep growing in usage as more business operations come to rely on web data. Here are some emerging directions:

- Advances in JavaScript scraping – Headless browsers and tools like Puppeteer make JavaScript scraping more powerful.

- More focus on ethics – Expect more emphasis on voluntary standards and ethical scraping principles.

- Tighter data regulations – Regulations like the GDPR will drive stronger compliance practices.

- Increasing adoption in research – Academic researchers recognize web scraping’s value for gathering datasets.

- Anti-bot protection – Websites will use more sophisticated bot detection to guard against abusive scraping.

- Scraping-as-a-service – Managed data scraping services will simplify extraction for non-programmers.

- Better analytics integration – Tighter connections between scraped data and business intelligence tools.

- Hybrid scraping strategies – Blending browser automation, APIs, and HTML scraping for resilience.

Data scraping lets anyone access the vast amounts of data on the web, but not without ethical considerations. As scraping matures, best practices and regulations will help keep it sustainable as a vital data extraction tool.

Final Thoughts

Data scraping is a powerful and essential tool for extracting valuable insights from the vast amounts of information available on the web. While it offers numerous benefits across various industries, it is crucial to approach scraping with a strong ethical framework and a clear understanding of legal implications. By adhering to best practices, respecting website policies, and ensuring compliance with data protection laws, individuals and organizations can harness the potential of data scraping responsibly and effectively. As technology and regulations evolve, staying informed and adaptable will be key to leveraging web data while maintaining ethical standards.

Frequently Asked Questions (FAQs) About Data Scraping

Data scraping is an immensely valuable technique for extracting insights from today’s vast online data sources. But for many, the world of web harvesting remains unfamiliar territory. Here are answers to some common questions about data scraping:

Is web scraping illegal?

It depends. Check a website’s Terms of Service and robots.txt file. Scraping public data is often legal, but scraping private/copyrighted data without permission may violate laws. Always get permission if possible.

Can you get in trouble for web scraping?

You can, if it is done unethically. Abusive scraping, copyright infringement, terms of service violations, and breaches of anti-hacking or data protection laws such as the CFAA and GDPR can all lead to legal trouble.

Is using scraped data plagiarism?

Not necessarily. While copyright laws still apply, it’s usually OK to use properly attributed/reproduced public data. But always exercise caution and cite sources.

Does web scraping damage websites?

Excessive scraping can overload servers and impact site performance. To scrape responsibly, use throttling, backoffs, and reasonable request volumes.
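As one possible way to throttle a scraper, the sketch below enforces a minimum interval between requests. The `Throttle` class and its parameters are hypothetical, and the right interval depends on the site; a Crawl-delay in robots.txt, if present, is a sensible starting point.

```python
import time


class Throttle:
    """Ensures at least min_interval seconds pass between consecutive requests."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self._clock = clock          # injectable so the class can be tested
        self._sleep = sleep
        self._last_request = None    # time of the previous request, if any

    def wait(self):
        """Block until the next request is allowed, then record its time."""
        now = self._clock()
        if self._last_request is not None:
            remaining = self.min_interval - (now - self._last_request)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last_request = now
```

A scraper would create one instance per site, for example `throttle = Throttle(min_interval=2.0)`, and call `throttle.wait()` immediately before each request.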

Is screen scraping illegal?

Not inherently. Screen scraping is a common method of extracting data from websites that do not expose it through APIs, and the same legal considerations and good practices as for web scraping apply.

Can you scrape data from Facebook?

Facebook’s Terms of Service do not allow scraping their site via automated means, so it’s best to avoid scraping Facebook without explicit permission.

Is AdSense scraping illegal?

Yes, Google AdSense expressly prohibits scraping ads or site content from its network, which may result in your account being banned or terminated.

What are the risks of web scraping?

Key risks include getting sued for legal violations, servers blocking scrapers, distorting data, scraping and reusing private data unethically, or having your scraping efforts copied by competitors.

Should I learn web scraping?

Yes – web scraping is an invaluable skill. With so much data online, the ability to harvest, analyze, and extract value from that data programmatically is extremely useful for businesses and analysts alike.

Jinu Arjun

Verified Experienced Content Writer

Jinu Arjun is an accomplished content writer with over 8 years of experience in the industry. She currently works as a Content Writer at EncryptInsights.com, where she specializes in crafting engaging and informative content across a wide range of verticals, including Web Security, VPN, Cyber Security, and Technology.